Education is awash with data. League tables and accountability measures have driven an obsession with headline figures, KPIs and metrics. The associated data collection, presentation and analysis processes consume time throughout the system. Do we collect too much data? Do we wait too long to act upon it?

This post is the third in our Challenges in Education series, written by industry expert Dan Stucke. Here, he looks at how we can use school and trust-wide data in a more agile way to make positive changes to outcomes on a day-by-day and week-by-week basis. Inspired by Harry Fletcher-Wood’s writing on the use of assessment data, Dan extends its principles to all forms of educational data and to wider school leadership. If you’d like to dig deeper into Harry’s thinking on assessment, please read his post and have a look at his other excellent work.

Are we reporting, or deciding what to do next?

Harry reflects on the seminal works of Wiliam, Black and Christodoulou to examine the difference between assessing to create a shared meaning (e.g. exam grades) and assessing to create a consequence (e.g. hinge questions). The former is used to report on the outcomes of a system; the latter is far more useful for deciding what should happen next in the learning.

This logic can be applied to other indicators measured with data in schools.

We measure to create a shared meaning of the quality of education at a school or trust. We fixate on headline measures such as Progress 8, persistent absence and permanent exclusions, which are reviewed annually or termly by senior leadership teams, governors and MAT boards. Whilst these measures are important, by the time they move they indicate deep-seated issues or long-standing positive change in a school or Multi Academy Trust. They are simplistic and of questionable use for day-to-day school management.

When we measure to create a consequence, we measure to respond.

A sudden increase in the number of students arriving late to school is picked up by good systems at the door each morning, backed up by an attendance team monitoring live metrics on a dashboard. The proactive team finds the cause (buses, roadworks, shops, etc.) and puts actions in place to improve things before the issue grows, affects AM attendance and starts to push up persistent absence.
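
To make “a sudden increase” concrete, here is a minimal Python sketch of one simple rule a live dashboard might apply: compare today’s late count with the mean and standard deviation of the previous few school days. The function name, the 10-day window and the threshold of 2 are illustrative assumptions, not a description of any particular product.

```python
from statistics import mean, stdev


def flag_late_spike(daily_late_counts, window=10, threshold=2.0):
    """Return True if the most recent day's late count looks unusually high.

    Hypothetical helper: compares the last value in `daily_late_counts`
    with the previous `window` days (window must be at least 2) using a
    simple z-score rule.
    """
    if len(daily_late_counts) < window + 1:
        return False  # not enough history yet to judge a spike
    history = daily_late_counts[-(window + 1):-1]  # the `window` days before today
    today = daily_late_counts[-1]
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return today > baseline  # flat history: any rise counts
    return (today - baseline) / spread > threshold


late_counts = [12, 9, 11, 10, 13, 8, 12, 11, 10, 9, 27]
print(flag_late_spike(late_counts))  # True
```

Lowering `threshold` flags more days. That suits acting early on a hunch: a false alarm costs only a quick conversation at the door, whereas a missed trend can feed into persistent absence.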

Growing negative behaviour logs, detentions and absences for a group of siblings are spotted by pastoral staff thanks to their overview of day-to-day data. A conversation with one child uncovers significant issues at home. Low-cost pastoral support is put in place in school for the siblings and, with this support, they re-engage with school life. Missing this could have led to significant absences, deteriorating mental health, year-long waits for CAMHS assessments, or even permanent exclusion.
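
The pattern the pastoral staff spot here (several kinds of concern rising together across one family) can be approximated with a simple grouped comparison. The sketch below assumes each event record carries a hypothetical `family_id` linking siblings, and compares concern counts per family in the last two weeks with the two weeks before. The event labels, windows and threshold are illustrative assumptions; real MIS codes will differ.

```python
from collections import defaultdict
from datetime import date

# Event types treated as concerns (assumed labels, not real MIS codes).
CONCERN_TYPES = {"behaviour", "detention", "absence"}


def rising_family_concerns(events, today, recent_days=14, min_increase=3):
    """Return family IDs whose concern events rose in the most recent window.

    `events` is an iterable of (student_id, family_id, event_type, event_date)
    tuples. Counts in the last `recent_days` days are compared with the
    `recent_days` days before that.
    """
    recent = defaultdict(int)
    earlier = defaultdict(int)
    for _student_id, family_id, event_type, event_date in events:
        if event_type not in CONCERN_TYPES:
            continue  # ignore positive or neutral records
        age = (today - event_date).days
        if 0 <= age < recent_days:
            recent[family_id] += 1
        elif recent_days <= age < 2 * recent_days:
            earlier[family_id] += 1
    return sorted(
        family_id
        for family_id, count in recent.items()
        if count - earlier[family_id] >= min_increase
    )


events = [
    ("S1", "F1", "detention", date(2022, 3, 1)),
    ("S1", "F1", "behaviour", date(2022, 3, 10)),
    ("S2", "F1", "absence", date(2022, 3, 11)),
    ("S2", "F1", "behaviour", date(2022, 3, 14)),
    ("S3", "F1", "absence", date(2022, 3, 15)),
    ("S3", "F1", "detention", date(2022, 3, 17)),
    ("S4", "F2", "behaviour", date(2022, 3, 2)),
    ("S4", "F2", "absence", date(2022, 3, 3)),
    ("S4", "F2", "behaviour", date(2022, 3, 16)),
    ("S4", "F2", "achievement", date(2022, 3, 17)),
]
print(rising_family_concerns(events, today=date(2022, 3, 18)))  # ['F1']
```

A flag like this is only a prompt for the conversation described above; it says nothing about the cause.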

A trust leader spots a growing number of bullying cases in one of the MAT’s schools. A conversation with the school’s senior pastoral leader reveals the work the school has been doing to raise awareness of all forms of bullying and to train in-school friendship champions. The senior leader shares student voice feedback demonstrating the positive impact of this work: students now feel safer in school. The apparent increase is down to a whole-school focus on the issue and a change in the way it is recorded. This is shared with other schools in the trust and leads to similar improvements elsewhere. The student voice feedback is also formalised across the trust and added to its data dashboard, allowing leaders to respond in an agile way and create a consequence when needed.

Wait for certainty, or act now?

Harry suggests that if we’re assessing to create a consequence, then we should act at the first twinge of suspicion. If our suspicion turns out to be misplaced, we can always modify the next step and test our inference. We’ll never be certain, but by acting quickly and adjusting our actions where necessary, we can make more (lower-stakes) decisions. Harry also draws parallels with lateral flow (LFT) and PCR testing during the Covid-19 pandemic, and the positive impact that LFTs had on our handling of the pandemic despite their inherent lack of accuracy and certainty.

As schools and MATs grow, it is easy for leaders to become more distant from the day-to-day life of schools. Leaders with accurate, live and insightful data streams can spend more time working with colleagues and students, taking actions that improve the overall quality of education. Trust and openness are crucial for this to work. The accountability framework that surrounds education in England and Wales means many working within the system have become obsessed with the headline measures, and perverse systems have built up around them in schools. Many teachers are tired of recording reams of assessment, attendance and behavioural data that is either over-scrutinised or completely ignored.

What should school and trust leaders do to enable this?

Automate reporting: Annual/termly reporting will still need to take place at a school and trust level to give those measures of shared understanding. Ensure you have systems in place that automate the data processing and presentation for all your key measures.

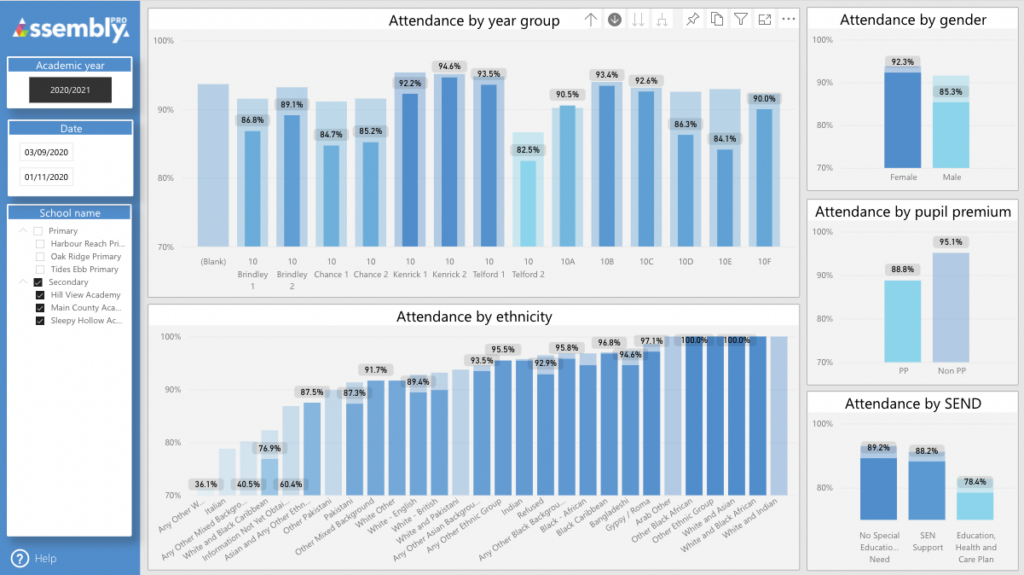

Invest in live analysis tools: Ensure that school leaders at all levels have easy access to the latest data collected, presented in a manner that allows them to draw inferences and dig down through to the student level if needed.

Combine your data sources: Your MIS will probably store characteristics, attendance and behavioural data. Assessment may be spread between your MIS and specialist tools. Safeguarding information is probably held elsewhere, and so on. Combining these sources gives you a rich view of individuals and groups of students. If staff have to check five different places for each child, it just won’t happen; school life is too busy.
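
As a minimal sketch of what combining sources means in practice, the Python below merges per-student records from three systems into one view. It assumes every system can export records keyed by the same student identifier (for example the UPN); the function name, source names and fields are hypothetical. Each field is prefixed with its source so that a same-named field in one system cannot silently overwrite another.

```python
def combine_student_views(**sources):
    """Merge per-student records from several systems into one view.

    Each keyword argument maps a student ID to a dict of fields. Field names
    are prefixed with the source name, and a student missing from a source
    simply lacks that source's fields rather than being dropped.
    """
    combined = {}
    for source_name, records in sources.items():
        for student_id, fields in records.items():
            view = combined.setdefault(student_id, {})
            for field, value in fields.items():
                view[f"{source_name}.{field}"] = value
    return combined


mis = {"S1": {"attendance": 0.93, "behaviour_points": 4}, "S2": {"attendance": 0.88}}
assessment = {"S1": {"maths_grade": 6}}
safeguarding = {"S2": {"open_concern": True}}

views = combine_student_views(mis=mis, assessment=assessment, safeguarding=safeguarding)
print(views["S2"])  # {'mis.attendance': 0.88, 'safeguarding.open_concern': True}
```

In practice this join would more likely live in a data platform than in a script; the sketch only shows the principle of one combined record per student, drawing on every system.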

Audit and simplify your data collection: Are you collecting data that is never or rarely used? If so, why? Don’t underestimate the time teachers, TAs, pastoral teams and admin staff spend entering data. If it’s not going to be used and isn’t a statutory requirement, then stop collecting it, or change your systems to gain value from it.
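
One way to start the audit is to compare how often each field is entered with how often anyone looks at it. The sketch below assumes you can obtain hypothetical per-field entry and view counts for a term (from system logs or a staff survey); it lists fields that are rarely or never viewed and are not statutory, ordered by how much entry effort they cost.

```python
def audit_fields(entries_per_field, views_per_field, statutory=frozenset(), max_views=0):
    """Return (field, entries) pairs for rarely viewed, non-statutory fields.

    `entries_per_field` and `views_per_field` map field names to counts over
    the audit period. Fields viewed at most `max_views` times are listed,
    largest data-entry burden first.
    """
    candidates = [
        (field, entries)
        for field, entries in entries_per_field.items()
        if field not in statutory and views_per_field.get(field, 0) <= max_views
    ]
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)


entries = {"homework_effort": 5200, "attendance_code": 30000, "reading_age": 400}
views = {"attendance_code": 900, "reading_age": 0}
print(audit_fields(entries, views))  # [('homework_effort', 5200), ('reading_age', 400)]
```

The output is a starting point for the “why are we collecting this?” conversation, not an automatic list of things to delete.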

Change the culture around data: Remove the fear, remove the complexity, and increase time spent talking, hypothesising, doing and reflecting. Share success stories. Add live data analysis to appropriate meetings. Cut headline reporting down to the minimum required. Don’t think about the reports you want to produce; think about the questions you need answered. Of course, this is all easier said than done, but lead from the front and model good practice. Train middle leaders and empower staff to spend their time removing barriers to learning and improving the quality of education. That’s what we all got into education for.

We can help

This is what our team and our Assembly Pro products do. Automated reporting, live analysis tools, and combined MIS and third-party data: that’s Assembly Pro in a nutshell, and we’d love to work with your MAT to implement these systems. Everything we do is focused on turning your data into meaningful information to help you make better decisions and improve outcomes for young people. Our team can work with you to make your data systems smarter and more impactful.

Already leading the way?

If you feel your MAT is already leading the way in this area, then we’d still love to work with you. Matt Woodruff, our Vice President of Analytics & AI, is leading our work as part of the Microsoft Open Education Analytics project. Please get in touch as we work together towards an exciting future, moving up the value chain of analytics. Matt has written a call to arms here that explains the project.

You can also join Matt for an exclusive Analytics session at Bett 2022. Register here – places are limited!

Data Trends – News & Articles you may have missed:

- Microsoft Open Education Analytics Project

- Ofqual’s annual report on the qualifications market is out

- Ofqual’s plans for controls on BTECs at Level 3 are out for consultation

- School attendance improved in February

- Secondary accountability measures update published by the DfE and dissected by @dataeducator